Overview

The State Penalties Enforcement Registry (SPER) is responsible for collecting and enforcing unpaid fines issued in Queensland. SPER's Reform Program, which began in 2014, included an information and communication technology (ICT) component that involved implementing new case-management software to help recover unpaid fines. Tabled 6 February 2020.

Auditor-General’s foreword

In my report number 1 for 2018–19, Monitoring and managing ICT projects, I noted that:

- $5.4 billion is wasted in Australia alone on projects that do not deliver benefits (INTHEBLACK 2016)

- the estimated cost of projects then underway, and reported on the Queensland ICT dashboard, was $1.3 billion

- the Queensland Government had plans to spend $2.6 billion on ICT projects over the next four years (2018–19 to 2021–22)

- 67 per cent of companies fail to terminate unsuccessful projects (Harvard Business Review, September 2011)

- 18 per cent of the projects reported on the Queensland ICT dashboard had been in the delivery phase for more than three years.

In my report, I also called out the HRIS program and the MyDAS project, both of which went through long, drawn-out processes before delivering functional systems.

Over the last couple of years, there have also been several cancelled ICT projects in the public sector. While this report focuses on the State Penalties Enforcement Registry (SPER) ICT project, there is a need for greater disclosure by agencies when ICT projects are cancelled. There is also a need for greater oversight of ICT projects by the newly created Office of Assurance and Investment (formerly part of the Queensland Government Chief Information Office) to help mitigate the risks of project delivery.

In recent months, I received two referrals from members of parliament asking me to audit specific ICT projects that have either been cancelled or have experienced significant cost overruns and/or service delivery issues.

One of the referrals relates to the Training Management System project at the Department of Employment, Small Business and Training. The department ended this project in 2018, before the system was delivered. While I do not intend to undertake an audit of this project, I have included in Appendix D of this report a summary of factual information regarding the project.

The other referral relates to the new SAP S/4HANA implementation at Queensland Health and the hospital and health services. I am currently undertaking a preliminary inquiry with the relevant entities, particularly in relation to implementation issues; late payments to vendors; and the continuing additional cost to manage the system. Based on the outcome of the inquiry, I will decide on whether to undertake a separate audit or include factual information about the project in a report to parliament.

Given the ongoing, heightened interest in ICT projects, I plan to prepare insights reports to parliament covering significant ICT projects. The purpose of these reports will be to develop insights and to share learnings from significant projects across the public sector. The information within these reports will be over and above what departments currently report through the government’s digital projects dashboard.

The Queensland Government intends to spend $2.6 billion on ICT projects over the four years from 2018–19 to 2021–22, so it is important that lessons are learned from past projects. A focus on improving oversight and governance, and on providing transparent information on cancelled projects, will help manage the risk of project failure.

Brendan Worrall

Auditor-General

Report on a page

On 25 March 2019, the Under Treasurer wrote to the Auditor-General about concerns with the State Penalties Enforcement Registry (SPER) Reform Program, which began in May 2014. His concerns were about the delivery of the information and communication technology (ICT) component. The Auditor-General agreed to audit the effectiveness of the governance of the program’s ICT component. This report contains the results of that audit.

SPER ICT

The ICT component of the SPER Reform Program involved implementing new case‑management software to assist SPER with recovery of unpaid fines. As part of the program, SPER signed a contract with a vendor to supply and implement its existing debt collections software, with a focus on configuring, rather than customising, its product to meet SPER’s business transformation needs. The vendor was to provide the case-management software to SPER through an ongoing arrangement for software as a service (SaaS); that is, the vendor retains ownership and SPER pays annual fees to use it.

Procuring the service

SPER originally went to market for a debt service manager who would also provide a case‑management software solution. The government policy for outsourcing changed while the procurement process was underway. SPER continued its original process to procure a case‑management software solution, but without an outsourced debt service manager.

Delayed definition of the operating model meant SPER and the vendor were not on the same page in terms of the system requirements. It also appears SPER’s requirements may have changed over time as it undertook its business transformation. SPER did not do sufficient due diligence of the vendor’s product or conduct reference checks on the vendor’s local staff who worked with it on the project. The vendor’s local delivery team was different from the international team involved in the procurement process.

We found weaknesses in the procurement process in terms of the independence and objectivity of the program steering committee and over-use of external consultants and contractors.

Governing the project

SPER did not have the right skills and experience to manage the project effectively. SPER did not sufficiently mitigate risks raised in assurance reviews and chose to remain overly optimistic rather than make the call to pause the project when it had the opportunity to do so.

The program steering committee was highly reliant on the advice and information provided to it by consultants and contractors, because of the skills gaps it had.

Because SPER and the vendor were not on the same page in terms of system requirements, the contract required significant changes as evidenced by the pattern of contract variations and change requests. The contract variations, in the end, increased the vendor’s revenue from the project, with an additional $10.3 million on top of the original agreed contract value for implementation of $13,780,609. SPER ended up without an ICT system because it terminated the contract and the vendor retained ownership of the software because it was a SaaS arrangement.
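To put the contract variations in proportion, the arithmetic can be sketched as follows. This is a minimal illustration only: it uses the two amounts quoted above, the variable and function names are hypothetical, and because the $10.3 million figure is rounded the results are approximate.

```python
# Figures quoted in the report; the variations amount is rounded to $0.1 million.
ORIGINAL_IMPLEMENTATION_VALUE = 13_780_609  # original agreed implementation value ($)
CONTRACT_VARIATIONS = 10_300_000            # additional vendor revenue from variations ($, rounded)


def variation_summary(original: int, variations: int) -> tuple[int, float]:
    """Return the implied total and the variations as a percentage of the original."""
    implied_total = original + variations
    increase_pct = variations / original * 100
    return implied_total, increase_pct


implied_total, increase_pct = variation_summary(
    ORIGINAL_IMPLEMENTATION_VALUE, CONTRACT_VARIATIONS
)
print(f"Implied implementation total: ${implied_total:,}")
print(f"Variations relative to original value: {increase_pct:.1f}%")
```

On these figures, the variations added roughly three-quarters again to the original implementation value.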

Introduction

The State Penalties Enforcement Registry (SPER) is part of the Office of State Revenue within Queensland Treasury. It is responsible for collecting and enforcing unpaid fines issued in Queensland. Unpaid fines include court-ordered monetary penalties, offender recovery orders, and infringement notices. SPER is established under the State Penalties Enforcement Act 1999 (the SPER Act).

Initial plan to engage an external debt service manager

From May 2012 to May 2014, SPER established a program to reform its business model. The program initially included outsourcing some of SPER’s debt collection to a debt service manager who was also being engaged to provide the software for case management. The following timeline shows key events and decisions during that time.

Following a machinery of government change, SPER was transferred from the Department of Justice and Attorney-General (DJAG) to the Office of State Revenue (OSR), Queensland Treasury.

OSR identified a range of improvement opportunities to SPER’s systems, processes, policy, and legislation. SPER’s business model was unable to accommodate the increase in volume of debt lodgements and it was not meeting the government’s expectation for debt-recovery outcomes.

SPER prepared a business case for the reform of its business model, which the Queensland Government approved on 15 May 2014. Queensland Treasury formed a steering committee to govern the SPER program comprising the OSR Commissioner (as program sponsor), a Deputy Under Treasurer, and the Director of Treasury’s Fiscal Discipline and Reform Unit. The Under Treasurer at the time appointed the SPER Registrar as the program director.

The proposed service delivery model approved in the business case included outsourcing to a debt services manager (DSM). The DSM was to provide software as a service to SPER, manage the debt register, and manage the collection of a portion of the penalty debts referred to SPER via a panel of (private sector) debt collection agencies.

SPER agreed to a secondment arrangement with a consultancy firm to provide transaction manager services for the procurement process.

SPER engaged a consultancy firm as the implementation advisor on 8 September 2014 (this was the same consultancy firm it engaged in January 2014 to provide advice on options to implement a DSM model for the business case).

Change in direction to insourced debt collection

With the change of government in Queensland in January 2015, the proposed business model was revised to take account of the new administration’s preferred direction. This included the removal of private sector involvement in debt collection. The SPER Reform Program was changed to:

- transform the business to better manage debt collection in-house

- engage an ICT vendor to support the business transformation with software as a service (SaaS). In SPER’s case, the contract’s original intent was for the vendor to focus on configuring, rather than customising, its software for SPER’s needs. However, it did not end up being a standard SaaS engagement as SPER required the vendor to significantly customise the ICT solution (application).

Software as a service (SaaS). The Queensland Government Chief Information Office defines software as a service as:

The capability provided to the consumer is to use the provider’s applications running on a cloud infrastructure. The applications are accessible from various client devices through either a thin client interface, such as a web browser (for example, web-based email) or a program interface. The consumer does not manage or control the underlying cloud infrastructure including network, servers, operating systems, storage or even individual application capabilities, with the possible exception of limited user-specific application configuration settings.

The following timeline shows key events and decisions during that time.

Treasurer’s media release stated that the government does not support the use of private debt collectors.

Treasurer approved a recommendation from the Under Treasurer to adjust the scope of the existing request for proposal to exclude those components of the service delivery model no longer supported by government policy.

SPER completed an update of the business model.

SPER assessed the new model versus the DSM model and concluded that the procurement process could recommence with amended documentation. The procurement would now be for the SaaS component only, rather than a DSM model and SaaS.

SPER asked two of the original proponents to undertake a revise and confirm exercise where proposals previously submitted were revised to reflect the change in preferred business model.

On 24 November 2015, SPER selected its preferred vendor. On 14 March 2016, SPER and the successful vendor signed the contract.

Between March 2016 and May 2019, there were three significant contract variations, several letter agreements to allow work to progress while the contract was being renegotiated, and over 300 change requests.

Following disagreements between SPER and the vendor, SPER terminated the contract on 17 May 2019.

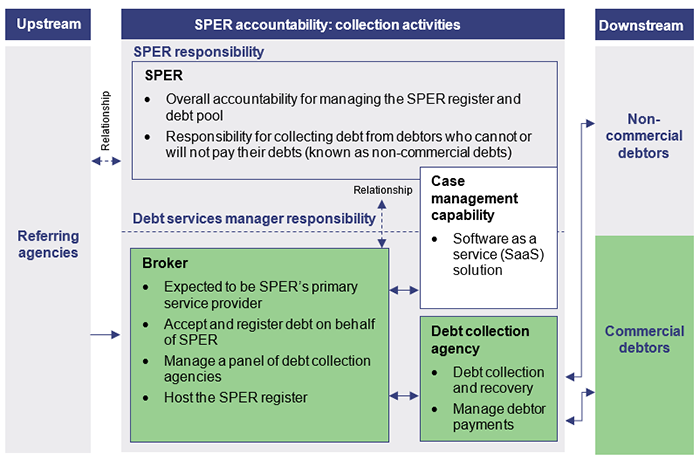

Figure A shows the allocation of responsibilities for the initial DSM model. The sections highlighted in green were originally to be outsourced, but after the change in government direction SPER retained these in-house. The SaaS solution for case management was to be provided by the vendor under both models.

Source: Queensland Audit Office, from SPER documentation.

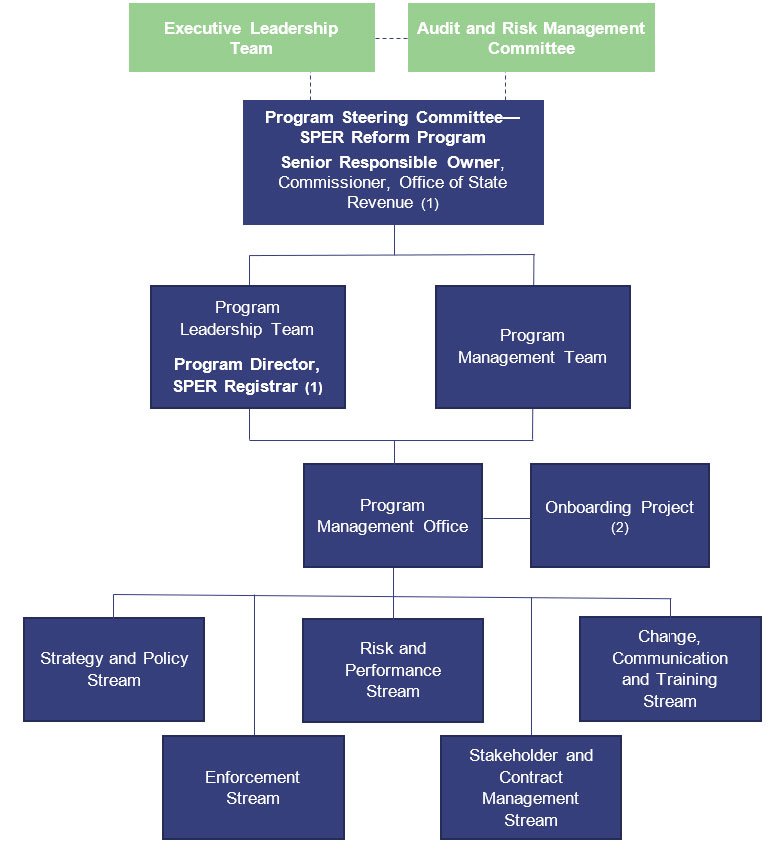

Figure B shows the governance arrangements SPER established for the program.

Note: 1) While the Office of State Revenue is led by a commissioner who is appointed independently of Queensland Treasury and the SPER Registrar is also a statutory officer, all staff in those areas are Queensland Treasury employees; 2) There was no ICT stream in the SPER Reform Program but there was an onboarding project. This was expected to act as the conduit between the SPER Reform Program and the vendor to ensure alignment between the two organisations. The SPER Registrar (who was also the Program Director) was the sponsor of the onboarding project and was supported by the implementation advisor. The program business case stated this project was to operate throughout the implementation, but it only operated for about three months in 2016. After this, the vendor reported directly to the program.

Source: Queensland Audit Office, from SPER documentation.

Key facts

| Key fact | Detail |
| --- | --- |
| Unsupported system | |
| Escalating debt | |
| Value of the ICT vendor contract | The total original contract value was $58.76 million, which included: |
| Project duration | |
| Contract changes | Between March 2016 and May 2019, there were three significant contract variations, several letter agreements to allow work to progress while the contract was being renegotiated, and over 300 change requests. |
| Program cost | The total SPER Reform Program cost (to 30 June 2019) was $76.8 million. This included $24.1 million for the business transformation and $52.7 million for ICT solutions. The ICT component cost included: |
| Project monitoring and assurance | The project conducted at least 20 reviews (gateway reviews, program health checks, and various other reviews) using 11 providers from June 2014 to July 2019. The ICT dashboard summary for the SPER project shows that the project was in amber for 860 days of the 1,015 days it was being reported on. |
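The program cost and dashboard figures in the key facts can be cross-checked with some simple arithmetic, sketched below. This is an illustration only: the names are hypothetical, and it assumes the ICT solutions figure is $52.7 million, which is what the stated total implies.

```python
# Figures quoted in the key facts ($ million and days).
TOTAL_PROGRAM_COST = 76.8            # total program cost to 30 June 2019
BUSINESS_TRANSFORMATION_COST = 24.1  # business transformation component
ICT_SOLUTIONS_COST = 52.7            # ICT solutions component (assumed to be in $ million)
AMBER_DAYS = 860                     # days the project was reported as amber
REPORTED_DAYS = 1_015                # days the project was on the dashboard


def share(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return part / whole * 100


# Round to one decimal place to avoid floating-point noise in the sum.
components_total = round(BUSINESS_TRANSFORMATION_COST + ICT_SOLUTIONS_COST, 1)
ict_share = share(ICT_SOLUTIONS_COST, TOTAL_PROGRAM_COST)
amber_share = share(AMBER_DAYS, REPORTED_DAYS)

print(f"Components sum to total: {components_total == TOTAL_PROGRAM_COST}")
print(f"ICT share of program cost: {ict_share:.1f}%")
print(f"Share of reported days in amber: {amber_share:.1f}%")
```

On these figures, the two components reconcile to the stated total, ICT accounts for a little over two-thirds of program cost, and the project was in amber for about 85 per cent of the time it was reported on.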

Why we performed the audit

On 25 March 2019, Mr Frankie Carroll, Under Treasurer, referred concerns to the Auditor‑General about the delivery of the ICT component of the SPER Reform Program.

In summary, these concerns related to the effectiveness of the management and administration of the ICT component of the SPER Reform Program by SPER, including the:

- timeliness in delivering the ICT solution

- clarity of the strategy for the ICT solution

- adequacy of oversight of testing, risk, and contract management

- reliability of project reporting.

The Auditor-General agreed to conduct a performance audit of the effectiveness of the governance of SPER’s delivery of the ICT component of the SPER Reform Program.

How we performed the audit

The objective of the audit was to assess whether the ICT component of the SPER Reform Program was governed effectively.

We assessed whether SPER:

- established an effective project board and governance processes to provide oversight of the SPER Reform Program

- applied appropriate procurement processes for the SPER ICT solution and effectively managed the contract with the successful vendor

- used an approved Queensland Government project methodology to effectively manage and administer the ICT component of the SPER Reform Program.

We conducted interviews and reviewed key documents. We spoke with current and former staff and senior officers from SPER and Queensland Treasury, key consultants SPER used on the program, and staff from the Queensland Government Chief Information Office.

Appendix C contains further details about the audit objectives and our methods.

Scope exclusions

We did not, as part of this audit, examine the effectiveness of:

- activities conducted by the vendor for the SPER Reform Program

- the business transformation component of the SPER Reform Program

- SPER’s decision to terminate its contract with the vendor (following a dispute resolution process, SPER and the vendor have settled the dispute to the satisfaction of both parties; the terms of the settlement are confidential).

Queensland Government Chief Information Office

During this audit, the role and function of the Queensland Government Chief Information Office (QGCIO) was revised and restructured within the Department of Housing and Public Works (DHPW). Our references in this report to the QGCIO refer to the former QGCIO, which operated at the time of the SPER ICT Reform. There is now an Office of Assurance and Investment, which reports to the Chief Customer and Digital Officer within the Department of Housing and Public Works.

Summary of audit findings

Procuring the services

Governing the procurement process

While SPER involved resources with the appropriate skills and experience to manage the debt collection aspect of the initial model, the effectiveness of SPER's oversight of the SPER Reform Program’s procurement process was adversely impacted by:

- weaknesses in the design of the steering committee. The chair of the steering committee also chaired the tender evaluation panel, which had the potential to compromise the independence and objectivity of the steering committee to challenge the process

- over-use of external consultants and contractors. SPER’s actions demonstrated that it outsourced the procurement process. Most of the work for the procurement process was undertaken by contracted-in individuals and external agencies appointed by SPER as experts. This created a risk that the vendor’s solution would not meet SPER’s needs as there were limited staff with in-depth SPER knowledge involved in the procurement process and product assessment

- inadequate ICT skills and experience to effectively manage the ICT component of the procurement process. SPER’s implementation advisor had the skills and experience to support SPER with the debt collection aspect, but not the ICT component of the procurement process. Under both models (SaaS plus outsourced debt collection, and SaaS plus insourced debt collection), SPER did not place enough emphasis on ensuring it had the right skills to manage the ICT component.

In relation to legal advice, Queensland Treasury’s legal division was not involved in the program because it did not have the necessary experience to assist with the contract for this program. Therefore, SPER engaged its own legal advisor to develop the contract. Queensland Treasury’s legal team did not provide support to the project until early 2019, when it became involved in the decision/process to terminate the contract.

Defining requirements

The government’s approach to procurement at the time (2014) was to go to market with high‑level objectives and let the market bring innovative/best practice approaches to it. SPER management advised us that they were relying on the vendor telling them what good practice looked like, rather than SPER needing to define exactly what it needed. Therefore, detailed functional requirements were not created from the start.

As such, SPER defined its original requirements predominantly as outcomes, which were supported by minimum system requirements. SPER missed an opportunity to properly define its requirements when there was a change in government direction. SPER assessed that it only needed small changes to the original requirements to accommodate the government’s outsourcing policy change, and that the procurement process could continue. In an outsourced model, outcomes required are defined and the delivery model is left for the vendor to determine how best to deliver the outcomes to the customer. However, SPER found the vendor’s product required considerable customisation to meet its needs.

When evaluating the process at the time of the change in government policy, the SPER team believed the ICT application currently being used to support the SPER debt management process could fail at any moment. SPER’s focus was on replacing the application at the earliest opportunity. It appears that SPER did briefly consider returning to the market but decided not to for four reasons: 1) it was concerned about the stability of its existing system, 2) it had already made significant progress in the procurement process, 3) it believed the objectives for the procurement were substantially the same, and 4) it believed it was unlikely there was another specialist provider of government debt management systems and services with specific penalty debt management experience in the market (based on its initial market research when it went to market for a debt service manager).

Assessing the vendor and the vendor’s product

SPER procured a product without performing a detailed assessment of the product’s suitability to meet its needs. SPER’s procurement team did not adequately assess the vendor’s capability or conduct due diligence on the vendor’s product during the procurement process.

SPER’s ability to assess the vendor’s product was constrained by the fact that it did not begin to define its requirements in sufficient detail until about 15 months after it signed the contract when it conducted a business process mapping exercise. Nor did SPER ensure the vendor’s product met its legislative or operational requirements. SPER did not consider whether the vendor’s product implementation in the United States of America was similar to its own requirements, and it did not conduct any site visits to see first-hand how the vendor’s product worked.

SPER did not properly assess whether its preferred vendor had demonstrable experience in delivering its product under a SaaS model. All reference checks undertaken during procurement were done over the phone. According to a technical review of the program conducted in June 2017, SPER’s vendor had just one client in the world using its solution through a SaaS model.

The vendor’s international sales team was involved in the procurement process but was not the same as the delivery team. SPER found the vendor’s local delivery team did not have a good understanding of the vendor’s product, as it had not yet been implemented anywhere in Australia. SPER did not assess the capability of, or conduct reference checks on, the vendor’s local team, with whom it had to work during the project.

Defining the contract deliverables

SPER specified outcomes and minimum system requirements in the contract from the project’s onset, and this did not change after the government direction changed. This type of contract did not work well in the circumstances as the software had to be extensively tailored to meet SPER’s specific business requirements (the original intent of the contract was for the vendor to focus on configuring, rather than customising, its product). SPER defined the operating model late as it expected the vendor to share its better practice operating model and, according to SPER, the vendor did not.

It appears that because SPER was concerned the existing system would fail, it was not willing to take the time to determine what it needed from the vendor to successfully implement the new model.

It is clear from the significant variations to the contract, and from many change requests SPER submitted to the vendor, that SPER and the vendor had different expectations about what services and deliverables were required. From the vendor’s perspective, SPER did not fully leverage the capabilities of its product and modify its business processes to work with its product. From SPER’s perspective, it found the product did not meet its requirements.

Governing the project

Composition of the program steering committee

Queensland Treasury set up the SPER program steering committee to govern the business transformation activities, but it did not adequately consider how best to govern the ICT component. The steering committee members lacked the skills to govern the delivery of the program’s ICT component and placed too much reliance on its implementation advisor to bring the ICT expertise. We observed several weaknesses, which compromised the steering committee’s ability to effectively govern the program’s ICT component:

- There were only two decision-making members in the steering committee for most of the project. There were not enough people to challenge decisions or provide independent advice.

- Project governance was not separate from organisational governance. Steering committee appointments were made based on their roles in Queensland Treasury rather than the skills and experience required to make the project a success.

- The steering committee did not establish a specialist sub-committee for the SPER ICT component of the program or include additional decision-making members in the steering committee who could specialise in the ICT component.

- The steering committee operated autonomously for most of the project, but did provide verbal updates to the Under Treasurer.

- The steering committee membership did not include representation from Queensland Treasury ICT or specialist ICT resources.

- Until November 2018, the steering committee did not include a representative from the Queensland Government Chief Information Office (QGCIO), which would have been appropriate given the skills gap the steering committee had. It should be noted that QGCIO is an advisor to Queensland government agencies on ICT-related projects and is not required to be on steering committees.

- Steering committee members did not have any prior experience in executing a project of this size, type, and complexity, and were highly dependent on recommendations made by external advisors.

- There were no independent members on the steering committee. All members had a direct stake in the program. The project lacked a critical friend who could independently and objectively challenge decisions being made.

- There was no evidence of regular written communication and updates between the steering committee and the Under Treasurer. The Under Treasurers (multiple during this project) were not involved in the key decision-making processes and were involved only for approvals, where required.

The operations of the program steering committee

The steering committee did not adopt a specific reporting framework because it did not adopt a project management methodology from the start. As a result, it was left to the best judgement of the contractors and consultants as to what was reportable and what was not.

The program steering committee was highly reliant on the decision-making advice and information provided by consultants and contractors because of its skills gaps. Because the meeting minutes mainly recorded outcomes, it is unclear whether, or how effectively, the committee evaluated the information provided or confirmed the adequacy of the consultants’ and contractors’ work.

The minutes of the steering committee show there was minimal reporting on the status of the ICT component from the project’s initial phase until the end of 2017 when status reporting became more consistent and formally documented.

The project operated as a silo within Queensland Treasury, but it did provide verbal updates to the Under Treasurer. The operating style at the time of this project was for the Commissioner of State Revenue and the SPER Registrar to operate with a fair degree of autonomy. Their respective statutory officers’ responsibilities are defined in legislation and they can execute their statutory functions independent of the Under Treasurer.

However, for a significant business-critical ICT project, it is important for Queensland Treasury and OSR to work together effectively. We found they did not do so on this project, as demonstrated by the differing perspectives they provided to us during this audit:

- The then Under Treasurer (May 2015 to September 2018) believed the project operated independently and not in a cooperative manner with Queensland Treasury.

- The OSR and SPER statutory officers believed the then Under Treasurer was not engaged in the project because he only required verbal updates.

Monitoring and managing project risks

The program steering committee underplayed some key risks during the project, such as the risk of project failure and the vendor’s potential lack of capability to meet SPER’s needs. The steering committee’s consistent advice to the Under Treasurer was that the project had issues, but that these would be resolved. The steering committee lacked the objectivity to question its previous decisions because the committee’s members were also responsible for selecting the vendor, overseeing the contract negotiations with the vendor, and managing the vendor during implementation.

Other weaknesses we observed with how the project managed risk were:

- the project did not define the method and classification of risks until 2018

- the steering committee and project team relied on assurance providers to raise risks and identify mitigation strategies when they performed their various reviews

- there was no process for escalating risks to other parts of Queensland Treasury executive or governing bodies.

Project assurance

SPER conducted at least 20 reviews (gateway reviews, program health checks and various other reviews) from June 2014 until the vendor contract was terminated in May 2019. But these activities were ineffective in preventing the project from failing because:

- the scope of some of the reviews (in particular, the program health checks) was constrained as they did not include reviewing the activities the vendor performed

- SPER did not address warning signs raised through some of these reviews—including concerns with the lack of an operating model, the vendor’s product, and SPER’s relationship with the vendor

- the reviews’ results were not shared outside the steering committee to keep those members accountable.

The gateway review process was not used effectively to highlight the contract risks. The project used a predominantly outcomes-based contract (with minimum system requirements) for a SaaS model where customisation, rather than just configuration, was required in the end to meet SPER’s needs. Before signing the contract (March 2016), SPER did not complete the future state operating model that the gateway review stated should be completed before contract execution.

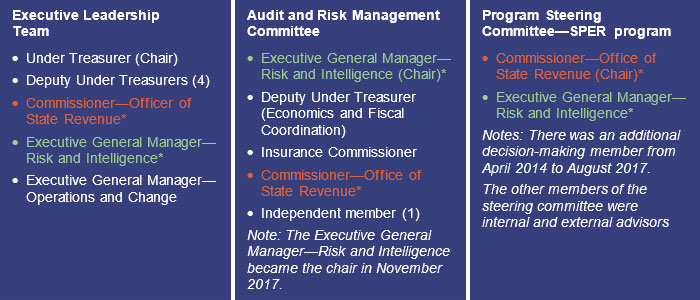

There was a conflict of interest that was not identified and managed—two members of the SPER program steering committee were also members of Queensland Treasury’s Executive Leadership Team and Audit and Risk Management Committee, as shown in Figure C. This compromised the independence of reporting to Queensland Treasury’s governance committees. There was also only one independent member across the three governing committees. The role of the Audit and Risk Management Committee in reviewing project risk was also unclear during the project.

Note: * Members of all three committees.

Source: Queensland Audit Office.

Queensland Government digital projects dashboard

The SPER team provided status reports to the QGCIO for periodic updating of the digital projects dashboard. However, we were unable to validate the accuracy of SPER’s updates to the QGCIO because there was no comparable information in the program documentation.

In this audit, we found issues with the digital projects dashboard (then named the QGCIO ICT dashboard) similar to those we reported in Report No. 1 2018–19, Monitoring and managing ICT projects. In particular:

- SPER’s explanatory notes only included detailed information from October 2017. Until then, there was limited detail reported even though the project was being reported as amber from November 2016.

- SPER’s explanatory notes did not include deliverables and outputs achieved.

- The SPER Reform Program end date changed four times. The status update only showed what had last changed. It did not highlight what the risks were for SPER with the ongoing delays.

The SPER Reform Program was reported on the digital projects dashboard in green until the second update in July 2016. From November 2016, it moved to amber, where it remained until March 2019 when it turned red. SPER made eight updates to the dashboard for the project between June 2016 and March 2019, when the project was removed from the dashboard. The dashboard summary for the SPER project shows that the project was amber for 860 days of the 1,015 days it was reported on.
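The proportion implied by these dashboard figures can be checked with a short calculation. The sketch below is illustrative only: the function and variable names are hypothetical, and it uses just the two day counts quoted above.

```python
# Illustrative check of the dashboard figures quoted above.
# Names are hypothetical; only the two day counts come from the report.


def share_of_days(days_in_status: int, days_reported: int) -> float:
    """Return the percentage of reported days spent in a given status."""
    if days_reported <= 0:
        raise ValueError("days_reported must be positive")
    return 100 * days_in_status / days_reported


AMBER_DAYS = 860       # days the project was reported as amber
REPORTED_DAYS = 1015   # total days the project was on the dashboard

amber_share = share_of_days(AMBER_DAYS, REPORTED_DAYS)
non_amber_days = REPORTED_DAYS - AMBER_DAYS  # green and red combined

print(f"Amber for {amber_share:.1f}% of reported days")
print(f"Green or red for the remaining {non_amber_days} days")
```

On these figures, the project was reported as amber for roughly 85 per cent of the time it appeared on the dashboard.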

The SPER Reform Program is no longer reported on the digital projects dashboard because projects are removed in the next publishing cycle following their closure. As a result, there is a lack of transparency of information about projects that end prematurely. There were no explanatory notes provided on the reasons for the project closure and how the project costs have been accounted for.

Assessing the option to terminate the contract

We found there was no appetite to consider contract termination until a new Under Treasurer was appointed in February 2019 who became more involved in examining the project. There were warning signs:

- As early as October 2016, a project assurance report stated ‘Successful delivery of the initiative is in doubt with major risks or issues apparent in a number of key areas. Urgent action is needed to ensure that these are addressed, to decide whether resolution is feasible.’

- In April 2017, a consultant’s technical review advised SPER officers to consider contract termination. However, while the SPER Reform Program steering committee discussed an early version of the consultant’s report that was presented to it in a steering committee meeting, there is no evidence of how it considered the issues raised. Subsequent versions of the consultant’s report were not tabled or presented at the steering committee and there is no evidence the then Under Treasurer was briefed on the findings. Senior officers of the program reported to us that the program did not agree with the consultant’s observations and therefore did not explore further the options to terminate the contract at that time.

There was a culture within the Office of State Revenue of being overly optimistic that the issues would be worked out. This attitude was costly to the project outcome because it meant the project continued to incur costs over a two-year period that ultimately delivered no value to SPER given the contract termination. SPER’s implementation advisor conducted an analysis of commercial implications associated with any termination of the contract in May 2017, which confirms that it would have been costly to terminate the contract without identifying a specific cause to terminate (this is known as termination for convenience). This is why SPER should have conducted due diligence on the vendor’s product up-front before entering into a long-term contract with the vendor.

Managing contractor performance

The contract that SPER signed with the ICT vendor did not effectively support the different stages of the project—an ICT solution that was highly customised before entering an operational support arrangement—nor did it contain sufficiently detailed specifications to enable effective contract management.

Without enough details in the contract for the implementation phase, SPER had limited power to measure and assess the vendor’s performance and take timely action where their performance was deemed to be unsatisfactory. Identifying a right to terminate the contract was difficult as unsatisfactory vendor performance was difficult to establish.

We found SPER’s contract management during the system-implementation phase was ineffective because:

- the contract management plan did not cover the ICT build component

- detailed contract deliverables were not clearly defined, as evidenced by over 300 change requests and three significant contract variations during the project

- performance indicators in the contract were targeted at the post-implementation stage but did not cover contract performance during the system-implementation phase

- the contract required significant variations and resulted in SPER accepting a reduced scope of deliverables for a higher cost

- not all meetings between SPER and the vendor were formally documented.

Managing contract variations

There was conflict between SPER and the vendor over the changes SPER requested during the project:

- From SPER’s perspective, the vendor did not accept that many of its requests for changes related to basic system functionality, which it expected the vendor to deliver within the existing contract.

- From the vendor’s perspective, the project timelines were compromised because SPER could not control the scope of the project and kept changing the project requirements.

SPER representatives advised us that the vendor insisted on change request forms being submitted for all changes even though, in SPER’s view, not all changes related to actual scope changes. From SPER's perspective, this put pressure on what was considered in or out of the original project scope and, over time, resulted in the vendor’s deliverables being reduced while the project cost increased. The lack of a common understanding between SPER and the vendor is the adverse consequence of the project scope and specific ICT system requirements being poorly defined.

Making contract payments

The initial contract called for 15 payments during the implementation phase, based on meeting milestones throughout the project.

After making payments of $6.6 million to the vendor for the first seven milestones, SPER and the vendor agreed to move to a time and materials basis while they renegotiated contract deliverables. They needed to do this because it became apparent to SPER that the vendor’s solution did not provide all the elements SPER expected. They used a time and materials basis for payments so they could continue to work while they agreed new contract terms. SPER expected the time and materials phase to take less than two months and to cost under $1.9 million. But it took 10 months (which includes a caretaker period for the 2017 state election)—from February 2017 to December 2017—for the contract to be renegotiated, with a time and materials cost of $8.35 million.
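The gap between what SPER expected and what happened in the time and materials phase can be expressed as simple ratios. The sketch below is illustrative only: the names are hypothetical, the figures are those quoted above, and because the expected values are upper limits ("less than two months", "under $1.9 million"), the ratios are minimum overruns.

```python
# Illustrative comparison of the time and materials phase against
# SPER's expectations. Names are hypothetical; figures are from the
# paragraph above. Expected values are upper limits, so the computed
# ratios are lower bounds on the overrun.

EXPECTED_MONTHS_MAX = 2      # expected duration: less than two months
EXPECTED_COST_MAX_M = 1.9    # expected cost: under $1.9 million
ACTUAL_MONTHS = 10           # February 2017 to December 2017
ACTUAL_COST_M = 8.35         # actual time and materials cost, $ million


def overrun_ratio(actual: float, expected: float) -> float:
    """Return how many times larger the actual value is than expected."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    return actual / expected


duration_ratio = overrun_ratio(ACTUAL_MONTHS, EXPECTED_MONTHS_MAX)
cost_ratio = overrun_ratio(ACTUAL_COST_M, EXPECTED_COST_MAX_M)
extra_cost_m = ACTUAL_COST_M - EXPECTED_COST_MAX_M

print(f"Duration: at least {duration_ratio:.1f} times the expected length")
print(f"Cost: at least {cost_ratio:.1f} times the expected amount")
print(f"Additional cost: at least ${extra_cost_m:.2f} million")
```

On these figures, the phase ran at least five times longer than expected and cost at least about 4.4 times the expected amount.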

The contract variations increased the vendor’s revenue from the project, with an additional $10.3 million in fees for the changes. SPER ended up without an ICT system because it terminated the contract and the vendor retained the software.

Managing systems integration

SPER engaged the vendor to provide a holistic ICT application to support SPER’s case management requirements. However, during the implementation, SPER identified that the vendor’s system was unable to deliver certain components. Therefore, it had to look at alternative ICT vendors for these components, which included business intelligence, single view customer platform, and general ledger accounting.

As SPER de-scoped elements from the vendor’s contract and brought in other vendors, it created the need for a systems integrator to ensure there was effective coordination of all the ICT components being developed. We were advised that SPER considered appointing a systems integrator but decided not to because of concerns with cost and because it concluded that the other vendors’ integration points were marginal.

SPER did not have a clear plan on how to integrate all the various components. SPER tested individual ICT components as stand-alone because the vendor’s SaaS solution was not available for performing system integration testing.

This led to a conflict with the vendor late in the project. The vendor felt that, because SPER did not perform or appoint a systems integrator, there was a lack of coordination and design gaps were identified late in the development phase, which required additional time and cost to remediate. A contract variation in November 2018 stated that one of the planning assumptions was that SPER is the system integrator and will coordinate overarching design and testing across all affected third parties.

Audit conclusions

Despite the efforts of the senior public servants involved in the SPER Reform Program (including the ICT component) and the application of many procurement, project management and assurance practices, the governance over this program was not effective from inception.

The SPER Reform Program had its genesis in the times of large projects involving government outsourcing services for the market to deliver using new and innovative approaches. It is important to note that, while the policy setting changed during the project’s life cycle, the original thinking associated with the outsourced model underpinned many aspects of the governance and project management approaches. These approaches were not suitable for the nature of the program the government moved forward with.

This program suffered from a culture within SPER and the Office of State Revenue that involved operating in a silo and being overly optimistic—SPER was always optimistic that it could manage its way out of the challenges it faced. SPER did not share its challenges widely enough to gather sufficient input and advice from other parts of Queensland Treasury or the Queensland Government. Despite the warnings SPER received from many reviews, it was confident the project would succeed.

SPER’s inexperience in projects of this nature and its unfounded optimism led to the following weaknesses in governance:

- insufficient guidance and direction regarding business requirements for the software

- inadequate separation of governance across procurement, project management, and management of the entity

- inadequate identification, formal documentation and mitigation of project risks

- inadequate procurement evaluation and due diligence on a vendor with which the government signed a long-term contract

- insufficient ICT skills for a project that involved tailoring software and integrating software solutions

- overreliance on external experts and not engaging external experts with relevant ICT skills to support the program. SPER’s spend on its own resources for this project was very low compared to its consultant and contractor spend. Its internal resources had limited capacity to focus on the project because of their business-as-usual responsibilities

- lack of consideration and timely actions to follow up issues in assurance reviews, for example considering termination earlier

- ineffective management of contractor performance.

The many failings of this project provide valuable lessons for the future.

Lessons learned

Software as a service contracts

Where SaaS contracts lock entities into long-term relationships, thorough due diligence of the vendor and their product is required. Entities should not use an outcomes basis as an excuse for not defining detailed project requirements appropriately, particularly if tailoring software is required. If entities have not seen the product working in action, they need to arrange site visits and see the product working first-hand. Entities need to be confident that the vendor’s product meets their needs and that vendors can work well with them.

Defining contract deliverables

Not defining the contract deliverables sufficiently up front is costly. When this happens, the vendor’s and entity’s expectations may be misaligned, which may result in many change requests and significant contract variations, which cost time and money.

Reliance on consultants and contractors

Over-reliance on consultants and contractors can result in a lack of business understanding when requirements are defined for ICT projects. When an entity lacks the expertise it needs for a major ICT project, it should engage a ‘critical friend’ who is independent of the delivery team and can provide objective and independent advice to the project steering committee on risks.

Limited capacity of internal staff to work on transformation projects

Involvement of staff with detailed knowledge of an entity’s business operations is important for transformational projects. But if staff need to continue their business-as-usual responsibilities during this time, it limits their capacity to be involved in the project and manage risks. Entities should consider freeing internal staff involved in transformational projects from their business-as-usual responsibilities by delegating and assigning their responsibilities to others.

Stop and rethink

Projects should not push ahead when major changes, such as government policy position changes, will impact on projects. Entities should take the opportunity to pause, assess risks, and fully reconsider before moving forward.

Contracts

Entities need to be careful that they do not commit to long-term software development and support contracts that make it hard for them to terminate when things go wrong. Entities should be confident the product works well before they commit to service agreements. Contracts should allow the entity to conduct assurance activities over the vendor during implementation and incorporate this into the project assurance.

Organisational culture

An organisation’s culture can inhibit project governance effectiveness when the entity operates in silos and when bad news is not communicated. Stopping a project before it incurs unnecessary costs is better than stopping it when significant money has already been spent.

Big-bang projects

For critical business transformation projects, trying to do everything at once is high risk. Implementing changes in segments provides more opportunity to review, learn and assess risk.

Project steering committees

Project steering committees for major ICT projects should include representation from internal ICT areas and the newly created Office of Assurance and Investment (formerly part of the Queensland Government Chief Information Office).

When steering committee members are part of the governance group for a long time and there are no members of the committee who are independent of the entity, they will find it hard to question decisions they have previously made. If entities are highly dependent on external consultants, they should engage an independent expert who can act as a critical friend and challenge the decisions being made.

Statutory officers’ roles

Statutory officers have responsibilities defined for them in legislation, which gives them independence from the chief executive officers in the entities they serve in when executing defined statutory officer responsibilities. But in addition to these, they also have management responsibilities (like delivering projects). It is important that statutory officers and chief executives work collaboratively to ensure effective delivery of major projects.

Recommendations

Department of Housing and Public Works

We recommend that the Department of Housing and Public Works:

1. develops and implements a guideline to assist entities in establishing digital and ICT contracts (including software as a service contracts)

This should include guidance on:

- minimum vendor and product due diligence

- clear contract milestones, break points, and pause options to ‘stop and rethink’

- minimum contract management requirements during implementation (including reviewing vendor performance) and post ‘go-live’ (Chapters 1 and 2).

2. works together with the Public Service Commission on strategies to upskill staff within the public service in delivering and governing ICT projects (Chapters 1 and 2)

3. works together with Queensland Treasury and the Department of the Premier and Cabinet to ensure that major ICT projects are established with appropriate governance arrangements before vendors are engaged

Project steering committees should:

- be staffed with appropriate skills and experience

- include whole-of-government representation where appropriate

- include members who are independent of the entity

- contribute to decisions about minimum assurance activities

- integrate effectively with an entity’s other governance groups and avoid duplication of membership across governance groups

- understand the risks and benefits of alternative approaches to project delivery—iterative/agile versus large scale transformation and how to contract appropriately (Chapters 1 and 2).

4. revises its investment review and project assurance guidance to:

- ensure project steering committee members understand that they are empowered to stop projects and rethink their position at every stage

- enhance the availability of reporting of historic recommendations and lessons learned (Chapter 2)

5. improves transparency of major ICT projects by requiring all departments to publish data on the digital projects dashboard, and a more detailed report to the Office of Assurance and Investment, for projects that end prematurely.

At a minimum, the data to be published on the digital projects dashboard should include the following information about the project:

- project and department name

- investment objectives

- date the project started, key milestones, and significant project journey events such as scope change, cost re-evaluation and delivery delay events

- reasons explaining why the project ended prematurely.

The report to the Office of Assurance and Investment should also include at a minimum:

- lessons learned

- the impact of not achieving the intended investment objectives within the originally stated time frames

- total costs incurred, broken down by sunk, capitalised and operational costs

- benefits achieved while the project was in-flight and whether the department will use some of the project deliverables (Chapter 2).
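One way an entity might check that a closure record covers the minimum items listed above is sketched below. This is a hypothetical illustration, not an official schema: the field names paraphrase the listed items, the helper function is invented for the example, and the sample record contains placeholder values rather than real project data.

```python
# Hypothetical completeness check for a project that ends prematurely.
# Field names paraphrase the minimum items listed above; they are not
# an official schema.

DASHBOARD_FIELDS = [
    "project_name",
    "department_name",
    "investment_objectives",
    "start_date",
    "key_milestones",
    "significant_events",   # scope change, cost re-evaluation, delays
    "closure_reasons",
]

ASSURANCE_REPORT_FIELDS = [
    "lessons_learned",
    "impact_of_unmet_objectives",
    "total_costs_by_type",  # sunk, capitalised and operational costs
    "benefits_and_reusable_deliverables",
]


def missing_fields(record: dict, required: list) -> list:
    """Return the required fields that are absent or empty in the record."""
    return [name for name in required if not record.get(name)]


# Placeholder record for illustration only, not real project data.
example = {
    "project_name": "Example project",
    "department_name": "Example department",
    "start_date": "2020-01-01",
    "closure_reasons": "",  # present but empty, so still reported missing
}

print(missing_fields(example, DASHBOARD_FIELDS))
print(missing_fields(example, ASSURANCE_REPORT_FIELDS))
```

Treating empty values as missing is a design choice in this sketch: it flags a record that names a field but gives no explanation, which is the transparency gap the recommendation is aimed at.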

Queensland Treasury

We recommend that Queensland Treasury:

6. updates its Audit Committee Guidelines—Improving Accountability and Performance for departments and statutory bodies to ensure audit committees are required to monitor and receive reports from management on risks for major ICT projects (Chapter 2)

7. updates its own audit and risk management committee charter to ensure the committee monitors risks on Queensland Treasury’s ICT projects, and reports its monitoring activities to Queensland Treasury’s Executive Leadership Team (Chapter 2)

8. reviews its governance structure to:

- avoid conflicts of interest through duplicate memberships

- clarify the difference for its statutory officers between their legislative and management responsibilities

- ensure it has an appropriate mix of skills on its governance committees (Chapter 2).

Reference to comments

In accordance with s.64 of the Auditor-General Act 2009, we provided a copy of this report to relevant agencies. In reaching our conclusions, we considered their views and represented them to the extent we deemed relevant and warranted. Any formal responses from the agencies are at Appendix A.

1. Procuring the services

This chapter is about how effectively the State Penalties Enforcement Registry (SPER) managed the procurement process.

Introduction

From May 2012 to May 2014, the Office of State Revenue (OSR) established a program to reform SPER. The SPER Reform Program included an information and communication technology (ICT) component that was initially to be predominantly outsourced to a debt service manager (DSM). The DSM was to provide software as a service to SPER, manage the debt register, and manage some penalty debt collection (collecting a portion of the penalty debts referred to SPER, excluding non-commercial debt, via a panel of private sector debt collection agencies).

In May 2015, SPER was required to stop the procurement process it began in July 2014 because of a change in government policy. Following a change in government, the Treasurer announced that an outsourced DSM was no longer the preferred model. SPER recommenced the procurement process under a new model, retaining the software as a service (SaaS) component but excluding debt collection (which was to be insourced). SPER awarded the contract to the successful vendor on 14 March 2016.

Between March 2016 and May 2019, there were three significant contract variations, several letter agreements to allow work to progress while the contract was being renegotiated, and over 300 change requests. Following disagreements between SPER and the vendor, SPER terminated the contract on 17 May 2019.

To assess the effectiveness of the SPER Reform Program (ICT component) procurement process, we examined whether SPER:

- effectively governed the procurement process

- clearly defined its requirements

- thoroughly assessed the capability and suitability of the product to meet its needs

- established an appropriate contract.

Governing the procurement process

In December 2013, Queensland Treasury established the SPER Reform Program and set up a steering committee to govern the procurement process and oversee the transformation. The steering committee continued for the duration of the project, although there were changes in membership over time.

SPER recognised that it needed external expertise to undertake a procurement of this nature. While SPER introduced appropriate skills and experience to manage the procurement process for the debt collection aspect of the model, the effectiveness of SPER's oversight was adversely impacted by:

- weaknesses in the independence and objectivity of the steering committee. The chair of the steering committee also chaired the evaluation panel, which compromised the independence and objectivity of the steering committee to challenge the process

- over-reliance on external consultants and contractors. SPER outsourced the procurement process to consultants and contractors, and did not sufficiently confirm the quality of their performance. For example, SPER did not critically review the adequacy of their procurement analysis. Limited staff with in-depth SPER knowledge were involved in the procurement process and product assessment

- inadequate ICT skills and experience to effectively manage the ICT component of the procurement process. SPER’s implementation advisor had the skills and experience to support SPER with the debt collection aspect, but not the ICT component of the procurement process. The ICT component was a key part of both models—of the SaaS with DSM model before the change in government, and of the SaaS-only model (with insourced debt collection) after the change in government. Under both models, SPER did not place enough emphasis on ensuring it had the right skills to manage the ICT component.

SPER retained overall control of the procurement through chairing the program steering committee and chairing the various evaluation panels throughout the process. However, most of the work was undertaken by contracted-in individuals and external agencies appointed by SPER as experts. This included:

- an implementation advisory firm

- a legal advisory firm

- a transaction manager

- a probity adviser.

This use of expert contractors created a risk that the procurement outcome (that is, the selected vendor) would not align well with SPER’s needs because the personnel involved in the procurement process had insufficient business knowledge of what SPER required the ICT system to do.

In relation to legal advice, Queensland Treasury’s legal division was not involved in the program because it did not have the necessary experience to assist with the contract for this program. Therefore, SPER engaged its own legal advisor to develop the contract. Queensland Treasury’s legal team did not provide support to the project until early 2019, when it became involved in the decision/process to terminate the contract.

Defining requirements

The initial procurement process to identify a vendor for the DSM model took almost a year and, from a process perspective, followed all required Queensland government procurement guidelines. After the change in government direction, the SPER team wanted to continue its procurement process rather than spend more time identifying and agreeing terms with a new vendor. SPER had made significant progress before the change in government policy.

The SPER team had serious concerns that the existing debt service management system could fail at any moment (due to its use of an old system, out of vendor support). It appears SPER did briefly consider returning to the market but decided not to for four reasons: 1) it was concerned about the stability of its existing system, 2) it had already made significant progress in the procurement process, 3) it believed the objectives for the procurement were substantially the same, and 4) it believed it was unlikely there was another specialist provider of government debt management systems and services with specific penalty debt management experience in the market (based on its initial market research when it went to market for a debt service manager).

We found that the high-level procurement objectives were defined, and that the contract (March 2016) included minimum system requirements, but SPER did not clarify its detailed functional requirements until late 2017 when it finally conducted a business process mapping exercise. SPER did not properly define its future operating model, nor assess its prospective vendor’s capabilities from the start (under the SaaS and DSM model), and this carried through to the subsequent procurement process (SaaS only) after the change in government.

At the time, the government’s approach to procurement was to go to market with high-level, outcomes-based objectives and let the market propose innovative/best practice approaches. SPER management advised us that, under this approach, they were relying on the vendor’s advice about what good practice looked like, rather than SPER needing to define exactly what they needed. Therefore, detailed functional requirements were not created from the start.

Initial procurement process—2012 to 2014

Expression of interest and request for proposal

The initial procurement process was well structured and consistent with what would be expected for an outsourcing procurement process. The background documentation provided to the market for the expression of interest and request for proposal was extensive and covered both the existing SPER systems and the outcomes sought. However, SPER did not define detailed system specifications.

We found the initial procurement process to select a vendor under the SaaS and DSM model:

- defined the scope of the services except for the ICT component for both the expression of interest and request for proposal

- involved appropriate consultation with key stakeholders who would be affected by changes to SPER's systems. SPER consulted with several agencies who would be affected by changes to the SPER ICT systems, especially entities that refer unpaid fines to SPER for collection

- included extensive market analysis to test the feasibility of a DSM model and to determine the depth of a suitable market to provide a competitive procurement process. As part of the development of the 2014 business case, SPER conducted a market sounding exercise by issuing a request for information. Thirteen entities responded to this, confirming to SPER that there was sufficient market interest to support a DSM model and sufficient depth to support a competitive tendering process.

The prospective proponents to the procurement were able to seek clarification from SPER about any points of uncertainty and were invited to attend workshops to further specify and clarify requirements and expectations. Proponents had access to a virtual data room for additional information.

Experts involved in the evaluation produced detailed reports of their observations, findings, and conclusions. Submissions from two proponents were assessed for value for money against a public sector comparator previously developed. The conclusions and recommendations of the evaluation panels were well documented.

The whole process was subject to oversight by an independent probity adviser who did not raise any concerns in his final report.

Recommencement of the procurement process

When the government direction for engaging an outsourced DSM model changed, SPER’s analysis indicated that removing references to a DSM would not significantly change the existing documentation. SPER considered it was appropriate to continue the existing process. Based on initial market research and responses to the expression of interest, SPER considered it was unlikely that another specialised provider existed in the market.

As SPER was almost two years into the procurement process and did not consider the change significant, it did not go back to the market when the government’s direction changed. The revised process with an amended scope took about two months (29 August 2015 to 30 October 2015, when bids were submitted). Under the new model, SPER required a provider to deliver a SaaS solution tailored to its needs. SPER did not properly assess whether its preferred vendor had demonstrable experience in delivering its product under a SaaS model. SPER selected the vendor based on its experience in delivering outsourced revenue collection services. According to a technical review of the program conducted in June 2017, SPER’s vendor had just one client in the world using its solution through a SaaS model.

The critical business need for SPER was to implement a new technology solution as soon as possible. SPER was concerned that any further delay caused by commencing a new procurement process would increase the risk of legacy system failure and require an interim system solution to mitigate this risk.

SPER asked two of the vendors from the initial procurement process to submit revised submissions, taking into account the changes in the model. These were re-evaluated by SPER’s implementation advisor.

Amendments made to the documentation to reflect the new model removed references to a DSM but otherwise remained substantially unchanged. More than 90 per cent of the original service requirements were the same, and no new requirements emerged from the definition of the new model. SPER extended the implementation advisor’s contract by $2.15 million, so the advisor could help SPER complete the procurement process and continue in the implementation advisor role until 30 June 2016 (through subsequent contract variations, the implementation advisor worked on the program until December 2017).

While SPER assessed that removing the outsourced DSM model did not fundamentally change the procurement objective, its analysis does not appear to have considered the impact on the contract’s expected deliverables—that is, tailoring a system for SPER to use in collecting debt versus predominantly outsourcing to a vendor to provide a debt collection service.

SPER and the successful vendor signed the contract on 14 March 2016.

Assessing the vendor and the vendor’s product

SPER procured a product without performing a detailed assessment of the product’s suitability to meet its needs and it did not have a good working relationship with the vendor’s team. SPER’s procurement team did not adequately assess the vendor’s capability or conduct due diligence on the vendor’s product. SPER management also reported that its requirements were not met because promises made by the vendor’s sales team were not being met.

SPER’s ability to assess the vendor was constrained by the fact that it did not define its detailed functional requirements because the contract was outcomes-based (with minimum system requirements). In addition, SPER did not ensure the vendor’s product met its legislative and operational requirements. For example, SPER only identified during the project that there was a mismatch of assumptions regarding whether all of SPER’s data would be converted, and that the vendor’s base product did not enable SPER to make payments to victims of crime, which it is required to do under the State Penalties Enforcement Act 1999. SPER did not do sufficient due diligence on the vendor’s product.

The vendor’s international sales team were involved in the procurement process, but were not the same as the delivery team. SPER found the vendor’s local delivery team did not have a good understanding of the vendor’s baseline product functionality, as it had not yet implemented it anywhere in Australia. The vendor adapted its team to include specialists from the United States of America because SPER required a high level of product customisation, rather than configuration, as was envisioned in the contract. The vendor’s debt management product had been implemented in the United States of America and Canada. SPER did not assess capability or conduct reference checks on the vendor’s local team, which was responsible for project delivery.

We observed several weaknesses with the depth of procurement evaluation. The evaluation panel gave the vendor high scores for some requirements based on its understanding of the vendor’s representations, which later fell well short of expectations. More due diligence on the vendor’s product and representations during the procurement process may have brought some of these issues to light earlier. For example, SPER did not consider whether the vendor’s product implementation in the United States of America was similar to its own requirements, and it did not conduct any site visits to see first-hand how the vendor’s product worked. All reference checks undertaken during procurement were done over the phone.

Figure 1A shows our observations of the weaknesses with SPER’s procurement evaluation in November 2015 when it confirmed its preferred vendor to deliver the SaaS solution.

| Criteria (procurement team evaluation)* | Key factors in evaluation | Panel’s score | QAO comments |
|---|---|---|---|
| Case management system (acceptable/outstanding) | Demonstrates strong understanding of government debt collection (over 30 years’ experience). Includes an integrated general ledger. | 10 | The 30 years’ experience comment does not include consideration of whether the vendor: SPER found during implementation that the vendor’s product did not have the capability to perform financial management, and then engaged an alternative vendor to provide a financial accounting solution. |
| Analytics and advanced reporting system (acceptable) | Comes with a full suite of ready-to-use reports, dashboards. | 8 | There were multiple instances during the project where reports had to be developed and SPER was charged separately for these as change requests. SPER de-scoped business intelligence from the vendor’s contract during implementation and engaged another vendor for this work. |
| Solution services^ (acceptable) | Highly automated and configurable solution which minimises manual input from SPER resources. | 10 | Each configuration change that SPER requested, but the vendor did not agree was in-scope, cost SPER more in change requests. Significant SPER resources were required to address configuration issues with the vendor’s product. |
| Relationship management (outstanding) | Vendor has a Brisbane base and its headcount has more than tripled in the last three years. Vendor has highlighted a broad range of successful local engagements. | 10 | The evaluation did not consider: |

Note: * Legend:

- Outstanding: The response provides a high level of confidence that the relevant service requirements will be met by the proposed solution. The response demonstrates a good understanding of the service requirements and sets out a realistic and clear approach for meeting them. The evaluation panel gave a score of 9–10 for criteria evaluated by the procurement advisor as outstanding.

- Acceptable: The response provided by the vendor provides a reasonable level of confidence that the service requirements will be met by the proposed solution. The response demonstrates a good understanding of requirements. The evaluation panel gave a score of 5–8 for criteria evaluated by the procurement advisor as acceptable.

^ Solution services—the evaluation panel did not agree with the specialist advisor’s rating (acceptable) and gave a higher rating of ‘outstanding’ (10).

Source: Queensland Audit Office.

Defining the contract deliverables

The contract was drafted predominantly with an outcomes-based focus (supported by minimum system requirements) because of the SaaS arrangement. While the contract was clear on the business outcomes SPER expected, SPER did not clearly define the operating model it would need to deliver these outcomes and expected the vendor to develop this.

As it was a co-sourced arrangement (partly in‑house and partly outsourced), clarity over SPER’s detailed functional requirements and the new operating model was critical to ensure both parties were aligned on the requirements. In a co-sourced arrangement like this, ideally the two parties would have co-designed the operating model prior to signing the contract for service provision.

It is clear from the significant variations to the contract and the many change requests SPER submitted to the vendor that SPER and the vendor had different expectations about what services and deliverables were required. The absence of a new operating model to define how the system would be used contributed to the misalignment of SPER’s and the vendor’s expectations for the project.

From SPER’s perspective, the vendor’s product did not meet its requirements. From the vendor’s perspective, SPER did not fully leverage the capabilities of its product and modify its business processes to work with its product.

A program health check report in October 2016 advised SPER to prioritise all activities involved in defining the gap between the baseline product functionality and SPER’s requirements.

A contract review conducted by a consulting firm in July 2018 stated that:

Given the outcome based nature of the original contract, it was not sufficiently clear or detailed in its requirements. This became more apparent during 2016 and 2017 as the capabilities of the technology and its ability to meet all business needs became clearer and a variety of policy and operating model changes took place.

About 15 months after SPER signed the contract with the vendor, SPER conducted a business process mapping exercise to define the requirements in detail. The original contract did not outline how, and by when, SPER and the vendor would develop and agree on the detailed design specifications.

Even after the business process mapping exercise, the expectations of SPER and the vendor were not aligned. This made it difficult for SPER to hold the vendor responsible for not delivering according to its requirements, because there was a disparity between the contract specifications and SPER’s actual business requirements. SPER and the vendor exchanged over 300 change requests during the project, including 50 changes SPER requested during user acceptance testing. The volume and nature of the change requests show that what was originally defined in the contract did not match what SPER or the vendor expected of the contractual arrangements. In SPER’s view, some of the change requests were also required to address system defects because the vendor insisted these matters be raised as change requests. SPER agreed with this so the issues could be resolved quickly. Other change requests from SPER were also required to address changes in legislative requirements during the project.

2. Governing the project

This chapter is about how well the State Penalties Enforcement Registry (SPER) governed and managed the information and communication technology (ICT) component of the SPER Reform Program.

Introduction

To determine how well the program was governed, we assessed whether SPER:

- established effective project governance, with appropriately qualified and experienced resources to provide oversight of the ICT component of the SPER Reform Program. This includes the members of the governing board having sufficient skills in complex project management involving ICT, and utilising whole-of-government and external expertise to ensure a successful project

- ensured the governance board received reliable information from the project to enable it to monitor and manage the project risks and delivery

- implemented effective project assurance processes to enable those charged with governance to make informed project decisions or take timely actions to remediate any identified issues

- managed the performance of the contractor through effective contract management.

Composition of the program steering committee

Queensland Treasury set up the SPER program steering committee to govern the business transformation activities but it did not adequately consider how best to govern the ICT component. The program steering committee did not include members with sufficient qualifications and experience for the ICT component.

The steering committee placed too much reliance on its implementation advisor to bring the ICT expertise, even though this was not specifically in the advisor’s contract. The implementation advisor was originally engaged for skills and experience to develop business cases, and provide commercial and transformational program advisory services, not ICT. The steering committee included members who worked in SPER, had major projects knowledge, and represented Queensland Treasury. However, the steering committee members lacked the skills to govern delivery of the ICT component of the program.

We found several weaknesses with the composition of the project’s steering committee.

- Number of committee members: there were only two decision-making members in the steering committee for most of the project. This means there were not enough people to challenge decisions or provide independent advice. The two decision-making members were also responsible for business-as-usual activities, which limited their capacity to focus on the project.

- Structure of project governance:

  - Project governance was not separate from organisational governance. The appointments to the steering committee were made based on their roles in Queensland Treasury rather than the skills and experience required to make the project a success.

  - The steering committee did not establish a specialist sub-committee for the ICT component or include additional decision-making members who specialised in the ICT component.

  - The steering committee operated autonomously for most of the project, but did provide verbal updates to the Under Treasurer. There was no formal reporting from the steering committee to the Under Treasurer or to the Audit and Risk Management Committee. Key risks raised in assurance reviews were not raised further than the steering committee.

- Composition of the program steering committee:

  - The steering committee membership did not include representatives from Queensland Treasury ICT or specialist ICT resources.

  - The Queensland Government Chief Information Office (QGCIO) was not involved as part of the steering committee until November 2018, when it started to attend meetings as an observer. QGCIO attended the meetings from November 2018 until the last meeting before the contract was terminated. It should be noted that QGCIO is an advisor to Queensland government agencies on ICT related projects and is not required to be on steering committees.

  - There were no independent members on the steering committee. All members had a direct stake in the program. The project lacked a critical friend who could independently and objectively challenge decisions.

- Communication: there was no written evidence of regular communication and updates between the steering committee and the Under Treasurer. The Under Treasurers (multiple during this project) were not involved in the key decision-making processes and were involved only for approvals, where required. We were advised that the Senior Responsible Owner of the program provided verbal updates to the Under Treasurer.

The program did not have a plan to manage and communicate with stakeholders during the implementation, such as internal stakeholders from Queensland Treasury (Under Treasurer, and the Audit and Risk Management Committee) or external stakeholders who refer unpaid fines and penalties to SPER. While the program identified dependent organisations when it drafted the business case, it did not identify, document, and develop a plan to manage those stakeholders during implementation.

Operations of the program steering committee

The program steering committee was highly reliant on the advice and information provided to it by consultants and contractors, because of the skills gaps it had. It is unclear whether, or how effectively, the committee challenged the technical aspects of the information provided, because the meeting minutes mainly recorded outcomes.

The program steering committee underplayed some key risks during the project, such as the risk of project failure or that the vendor may not have the capability to meet its needs. The program manager’s consistent advice to the steering committee and the steering committee’s advice to the Under Treasurer was that the project had issues, but these issues would be resolved. Because the members of the steering committee were also responsible for selecting the vendor, overseeing the contract negotiations with the vendor, and managing the vendor during implementation, they lacked the objectivity to question their previous decisions.

The project operated as a silo within Queensland Treasury, but it did provide verbal updates to the Under Treasurer. The operating style at the time of this project was for the Commissioner of State Revenue and the SPER Registrar to operate with a fair degree of autonomy. Their respective statutory officers’ responsibilities are defined in legislation and they can execute their statutory functions independently of the Under Treasurer.